What's the best subsonic client for Android?

I've been using Ultrasonic for a while now, and I have mixed feelings about it. It does the job, for sure, but it's also been a pain. The UI isn't super intuitive, it's missing the ability to rate music, and it has had a bug since Dec 2023 that resets playback and duplicates the now playing queue whenever you rotate the screen.

I finally got annoyed enough to see what else is out there! So...

What else is out there?

I looked at all 5 different subsonic API clients that were available to download from F-Droid. (I left out Funkwhale because it doesn't use the subsonic API, and DSub because it hasn't been updated since 2022.)

I've heard good things about Symfonium, but it's neither free nor open source, so I feel like I have to at least investigate other options before I go down that road.

What makes a good subsonic API client?

This obviously varies from person to person, which is probably why there are so many different clients out there! But I have a pretty long list of features that I'd like to have, and some are more important than others. So, I wrote down which features I felt were nice while I interacted with the various apps for a while and came up with this list, roughly sorted by importance.

- MPD Style Queuing

- Scrobbling

- Playlist Management

- Lock screen widget functionality

- Starring

- Sharing

- Rating

- Intuitive Interface

- Download Songs

- Mix/Radio/Autoplay Function

- Seamless Offline Playback

The first thing you might notice is that "playing music" isn't one of the features. There are a few things that I take for granted when I install a music client. If you can't play music, skip songs, search, etc. then you aren't even worth considering. The above features are all supported at varying levels by the applications in question.

The next thing you might notice is that it isn't obvious what some of those things mean exactly. That's fair! Their meaning isn't super obvious, but it'll make more sense to show what's what first and then explain them afterward.

The Big Table O' Features

| App | MPD Style Queuing | Scrobbling | Playlist Management | Lock screen widget functionality | Starring | Sharing | Rating | Intuitive Interface | Download Songs | Mix/Radio/Autoplay Function | Seamless Offline Playback |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ultrasonic | Yes | Yes | No | Prev, Scrub, Star, Shuffle, Pause | Albums, Songs | Yes | No | No | Yes | No | No |
| YouAMP | Songs only | No | No | None | Artists, Albums, Songs | No | No | Yes | No | No | No |
| Tempo | Yes | Yes | Yes | Prev, Next, Scrub, Pause | Artists, Albums, Songs | Yes* | Songs | Yes* | Yes | Yes* | No |
| subtracks | No | Yes* | No | Prev, Next, Scrub, Pause, Stop | Artists, Albums, Songs | No | No | Yes | No | No | No |
| DSub2000 | Yes | Yes | Yes | Prev, Next, Scrub, Dislike, Star, Pause* | Artists, Albums, Songs | Yes* | Albums | No | Yes | No* | No |

This table tries to capture which apps support what and to what degree. Unfortunately, some of these items have varying degrees to which they can be supported, so it's not obvious how to rate them all, but the color approximates how good of support the app has for the feature.

Now I'll explain what each of these categories means, along with what the asterisks in the table stand for.

MPD Style Queuing

MPD is an old music player. The way that you play music in it is by adding songs to "the queue". So to play a playlist you just copy over the songs from the playlist to the queue. To play an album you copy it over to the queue. If you want to play two albums in sequence you add the first to the queue, then add the second to the back of the queue. It's very intuitive and very powerful. This is also how many streaming services and music apps work nowadays, but I have seen ones that don't work like this. I'm pretty sure iTunes used to only let you play playlists that you build, albums, or individual songs. That does not work with the way I listen to music. This is my most important feature for sure.

YouAMP: Only allows you to queue songs, not albums.

subtracks: Doesn't allow queuing of any kind other than playing an album, as far as I could tell.

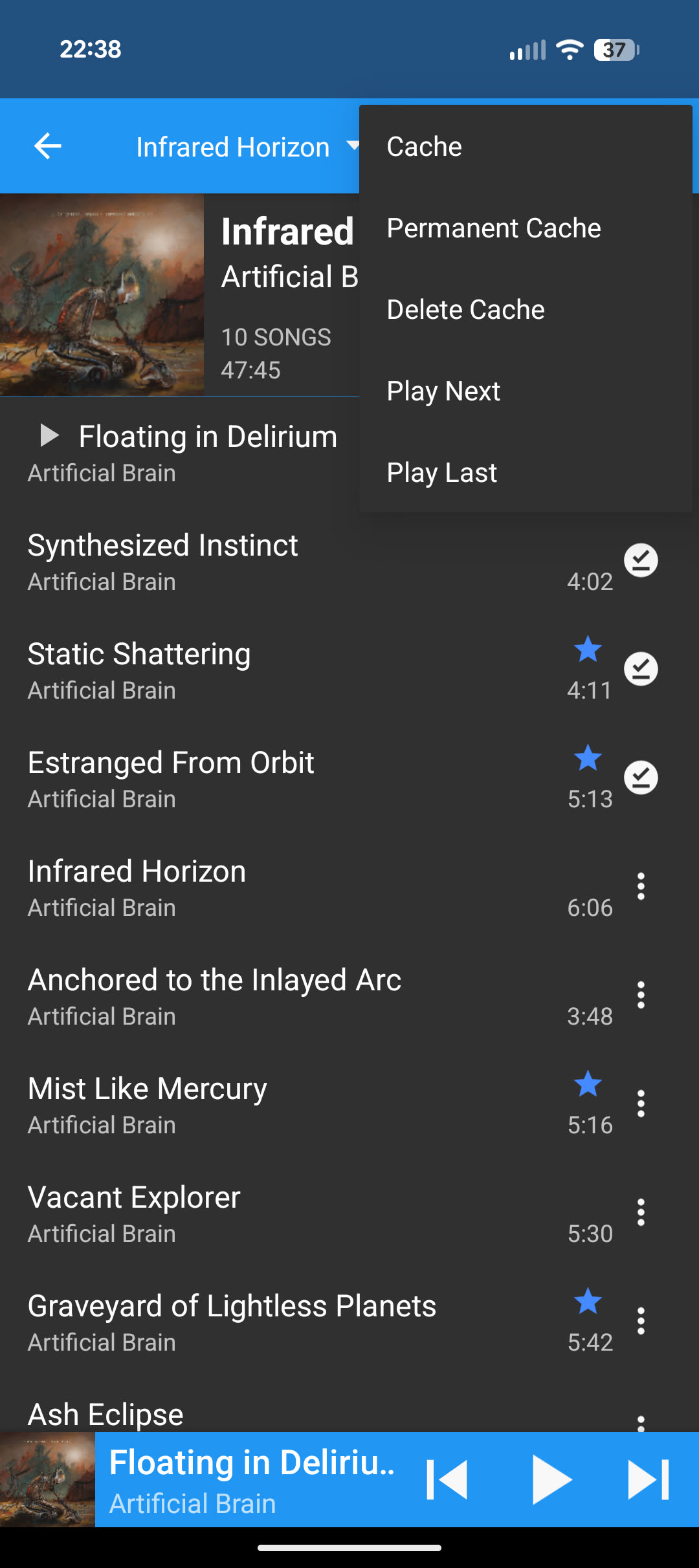

DSub2000: It's probably worth noting that you have to enable an option to allow you to queue an album: enable Settings > Appearance > Play Last.

Scrobbling

Scrobbling is a term for tracking how many times you've played a song. I scrobble to listenbrainz, but other people might scrobble to last.fm. Keeping track of what I listen to is somehow about as important to me as listening itself. It's a bit obsessive.

YouAMP: I wasn't able to find a way to enable scrobbling

DSub2000: I couldn't find a way to disable it. Every other app allows you to disable it, but I personally don't care about that.

subtracks: It works, but it scrobbles as soon as you start the song, so you don't get a "now listening" on listenbrainz, which is lame.
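For anyone curious why "now listening" and a real scrobble are separate things: in the subsonic API both go through the same scrobble endpoint, and a submission flag says which one the client means. Here's a rough sketch of the two request URLs. The server address, username, password, salt, song id, and the client name "example" are all made up for illustration, and whether the server forwards anything to listenbrainz depends on how the server is configured.

```shell
#!/bin/sh
# Sketch only: the server, credentials, and song id below are placeholders.

# Subsonic token auth: t = md5(password + salt), sent along with the salt (s).
subsonic_token() {
  printf '%s%s' "$1" "$2" | md5sum | cut -d' ' -f1
}

# Build a scrobble URL.
# submission=false is a "now playing" notification,
# submission=true asks the server to record the play.
scrobble_url() {
  server=$1; user=$2; pass=$3; salt=$4; song_id=$5; submission=$6
  token=$(subsonic_token "$pass" "$salt")
  printf '%s/rest/scrobble?u=%s&t=%s&s=%s&v=1.16.1&c=example&id=%s&submission=%s\n' \
    "$server" "$user" "$token" "$salt" "$song_id" "$submission"
}

now_playing=$(scrobble_url 'https://music.example.com' 'me' 'secret' 'c19b2d' '42' false)
played=$(scrobble_url 'https://music.example.com' 'me' 'secret' 'c19b2d' '42' true)
echo "$now_playing"
echo "$played"
```

A client that wants both would send the first request when a song starts and the second once enough of it has been played.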

Playlist Management

This is pretty self explanatory, albeit a little surprising that it isn't totally standard behavior. I want to be able to create and delete playlists, as well as add, remove, and reorder songs in a playlist.

Lock screen widget functionality

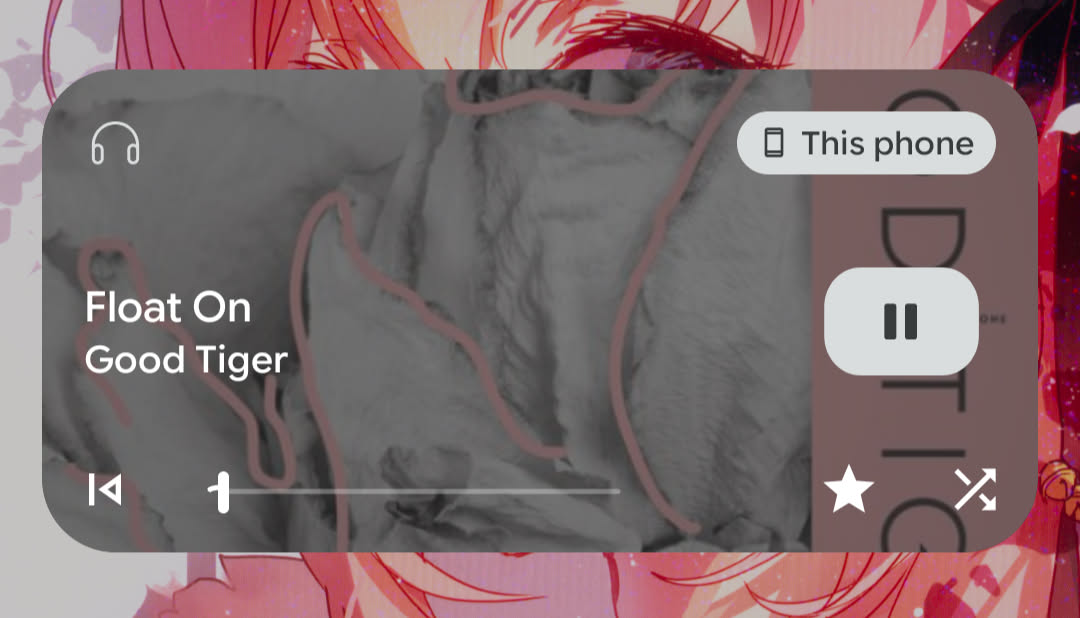

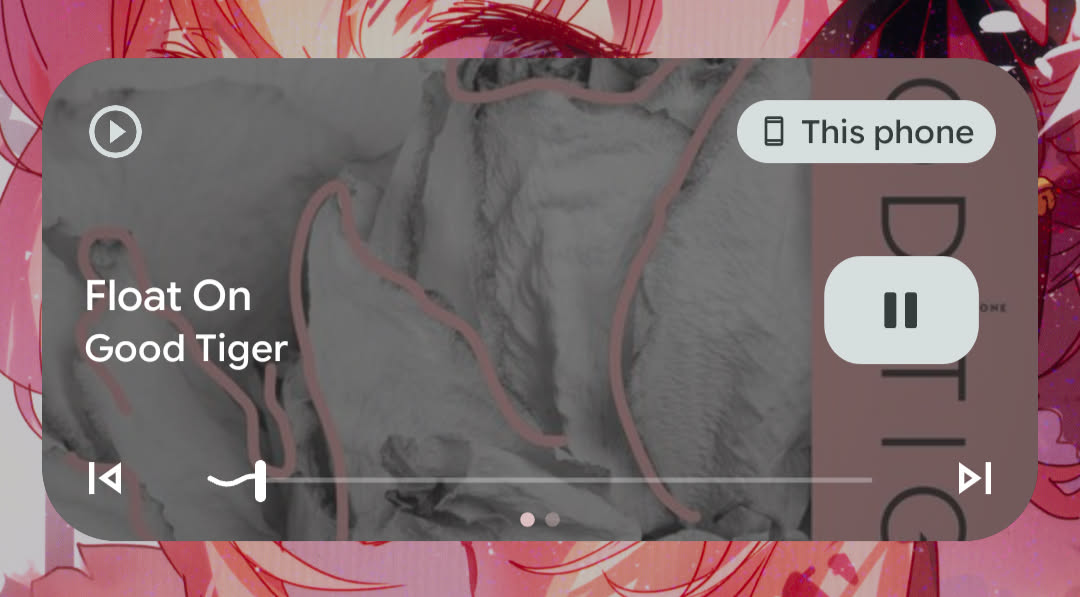

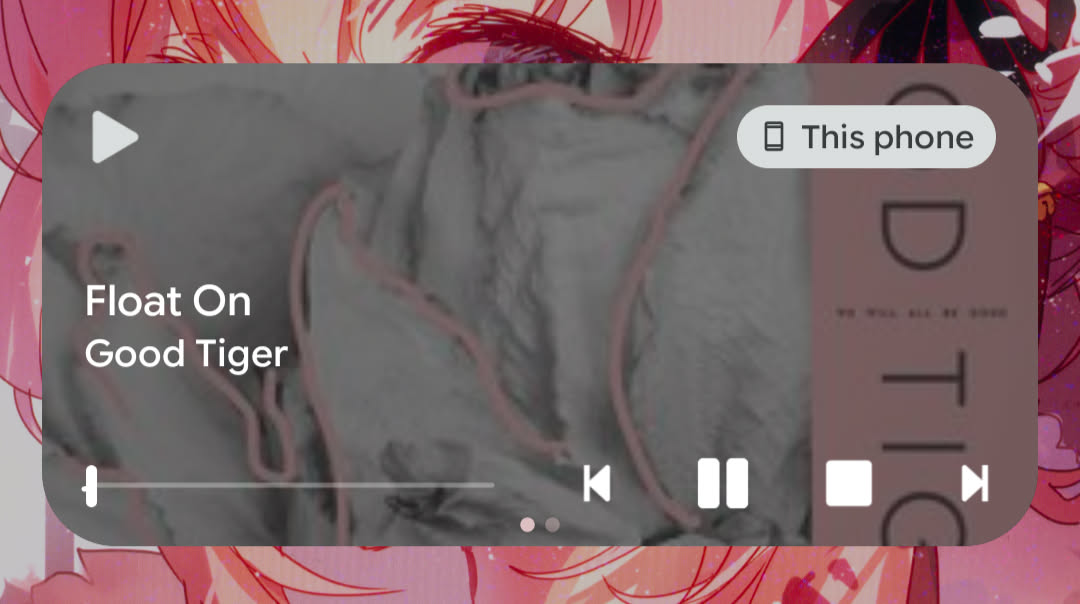

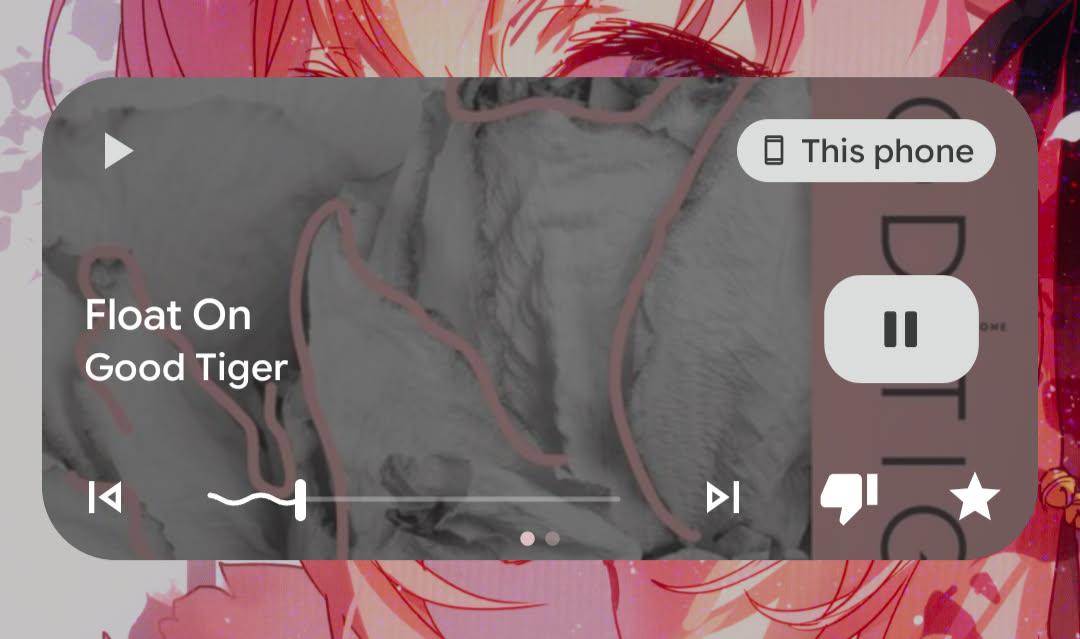

Here's the images of the lock screen widgets. They mostly look the same other than what buttons they expose, except for YouAMP which doesn't have one.

Ultrasonic

Tempo

subtracks

DSub2000

The lock screen widget is very important to me. I get most of my music listening done in the car. I don't want to have to mess around with unlocking my phone or navigating through the app to do some basic things such as skip, or star a song. The color is based on those two buttons that I care about - star and next.

DSub2000: This one seems like it should be the best by a lot, but there's currently an issue where pausing closes the widget, so you can't start playing the song again without opening up the app. Essentially, it functions as a stop button, which makes it unusable in my opinion. DSub2000 has approximately a million config options (which is awesome!), but I couldn't find one that fixes this behavior. I can't say it doesn't exist though, so yellow for you!

Starring

Different apps call it different things. Favorite, Like, Star, etc. Subsonic refers to it as star so that's what I'll call it. Starring is just one of the few ways that you can categorize your music and I want to be able to use it!

Ultrasonic: You can't star artists, not a big deal, but everyone else managed to do it right.

Sharing

If your server supports it you can share your music with other people even if they don't have an account by sharing a special link with them. Some apps have a way to get these links from the server natively. This is pretty nice to have so that I don't have to log into Navidrome's web UI to share music.

YouAMP: Doesn't support sharing

Tempo: No config options when sharing

subtracks: Doesn't support sharing

DSub2000: No config options when sharing

Rating

Rating is similar to starring, but distinct from it. Starring has two states, starred or unstarred. Rating has 6 states: 1-5 stars, or 0 stars which represents unrated. I listen to a lot of music that doesn't make it easy to remember the titles of songs. Having a way to rank music gives me a way to easily choose between my favorites, mostly good stuff, or stuff I haven't yet rated depending on how I'm feeling.

Tempo: Only supports rating individual songs

DSub2000: Only supports rating whole albums

It is possible to rate artists in subsonic, but none of these apps support it.
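To make the six states concrete, here's a small sketch of how a client might validate a rating before sending it to the server. The subsonic API has a setRating endpoint that takes an id and a rating from 0 to 5, where 0 removes the rating. The helper names are mine, and the auth parameters are left out to keep it short.

```shell
#!/bin/sh
# Map a rating value to a label; 0 means "unrated".
rating_label() {
  case "$1" in
    0) echo "unrated" ;;
    1) echo "1 star" ;;
    [2-5]) echo "$1 stars" ;;
    *) echo "invalid rating: $1" >&2; return 1 ;;
  esac
}

# Query string for the setRating endpoint (auth parameters omitted).
# $1 = id of the song/album/artist, $2 = rating from 0 to 5
set_rating_query() {
  rating_label "$2" >/dev/null || return 1
  printf 'rest/setRating?id=%s&rating=%s\n' "$1" "$2"
}

rating_label 0
rating_label 4
set_rating_query 42 4
```

Anything outside 0-5 is rejected before a request is ever built.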

Intuitive Interface

The meaning is obvious, but what makes an Intuitive Interface depends on the person and which features they're trying to use. This is just a subjective measure of how many times I had trouble finding something, or accidentally took an action I didn't mean to. Every interface has its quirks, but some are truly strange.

Ultrasonic: The symbols don't have obvious meanings until you click around the first time to memorize them. When you're on an album there's a play button up in the top right which plays the whole album, which isn't where I expect the play button to be (bottom-center). It gets even more confusing when you have a song selected in the same menu. In this case, there are two play buttons that each do different things: top right plays the album, bottom left plays the selected songs. I think it's not a great design that you can end up in this confusing situation.

Tempo: Tempo's interface is mostly intuitive, but the media library page is very strange. There are basically no options in it other than to look around by folder and play individual songs. There are albums listed, and yet you can only play songs... Weird. If you ignore that page it's pretty understandable.

DSub2000: My main complaint here is with the "Now Playing" page. I think the below gif (with director commentary) will illustrate why.

I start by opening what I would call the "now playing" screen by clicking on the bottom bar, fair enough... But then it gets weird already. I tap on the album art, I'm not exactly sure what I think this should do (probably nothing?), but it takes me to the queue.. Interesting. The list-with-music-note button is still on the bottom right though, that usually is the button to show you the queue. What happens if I click that? Brings you back to the album art. K... I guess that's not thaaaat weird, but I don't love that the symbol for go to the queue and return from the queue is the same. Then I tap the back arrow on the top left and that brings me back to where I was, good stuff.

Okay, so that's not too bad, but it gets more confusing. I open the "now playing" screen again to the album art, tap the album art to see the queue and now I want to go back to the album art. So what do I do? I click the back arrow! But as previously discussed, that takes you back to the previous screen. Not the previous screen in this context, nonono, it's the previous screen where we were selecting songs from the album (where the video started), but they look almost identical and have almost identical long press and triple dot menu options, but not actually identical!

Not ideal so far, but what else could there be on one screen? Let's open up the "now playing" screen one more time. The three dots in the top left might have something interesting, so I tap. Hmmmm "Remove all"... Sounds like it'll remove all the songs from the queue. Too bad I can't see any of them from this screen. "Exit", what does that do? Use your buzzer to guess now:

- Just close the menu

- Close the "now playing screen" and return to the album view

- Close the whole app

- Stop playing the current song

Watch the gif to find out.

Ah of course, it closes the whole app. That probably makes the most sense of those options, but why is that even a menu option? The worst part is that "Exit" is only there on the triple dot menu on the "now playing" screen! This is the album view with the triple dot menu open:

I don't know what to say...

Download Songs

This is the ability to download songs while online and play them back later when you're not connected to the internet. I think that most people desire this feature more than I do, because it's listed as one of the highlighted features in every app that supports it. I think I've used it one time in the last 3 years. It's nice to have in a pinch though.

Mix/Radio/Autoplay Function

This is the ability to generate a queue of similar songs on the fly. There are a few different implementations that are more or less the same for me. You can add songs to the queue after all the manually queued ones, you can offer a "radio" or "mix" button which will play similar songs, anything like that would work for me really. I don't really need this, but sometimes when I'm in the car I don't want to queue up more stuff and would rather just let the app decide.

Tempo: I didn't try this extensively, but it seems to only play songs by the same artist, which is nice I guess? But I could just shuffle a few albums around and get similar results, I want a little more than that. It technically does have the feature though, so light green for you.

DSub2000: I thought they didn't have an option at first, but when I dug around a little I noticed a radio icon when you're looking at an artist. Cool! "They can't generate it from albums, but at least they have something" I thought to myself. Then I clicked it and it brought me to the "Now Playing" screen with nothing in the queue. Every time. I don't know if this is a feature in progress or what, but it doesn't work, so it doesn't count.

Seamless Offline Playback

My desire for this comes from my experience with offline playback in Ultrasonic. To do offline playback in Ultrasonic you need to switch servers to the "offline" server. This was very unintuitive to me and also meant that you can't switch back to online mode without more manual intervention. The idea here is to only show the songs that are available to you at any given time. If you lose connectivity then the available songs are just the ones that you have downloaded currently, and when you come back online you see the full library again. Unfortunately for me, this was just a dream feature, because no app I tested supported it. :(

Conclusions!

I think that someone could choose Tempo or DSub2000 and have that be a good choice for them, but I think Tempo is going to be the one for me. I'll probably also try out DSub2000 for a little while too just to make sure I'm not missing out.

It's pretty close overall, but I like the UI a lot more on Tempo and DSub2000 has that one killer bug(?) with the lock screen widget. The features that I'm missing from Tempo seem like they could be easy enough to implement myself (in theory, without actually looking at their code yet haha), so if I just need to add a few buttons I can try that. I certainly can't overhaul the UI of DSub2000, so even if Tempo was a little worse I'd have more options with Tempo anyways.

It's worth noting that Tempo hasn't been actively maintained in 8 months or so, but some forks have popped up to try to keep PRs flowing. I tried eddyizm/tempo for just a little while and noticed that they added some more buttons to the lock screen widget, but no star option yet. At least changes are still coming in on that fork.

Unless something changes I don't think the other three are going to do it for me. If you're looking for a different set of features, then you might like a different application more. For instance, YouAMP is very simple and hard to mess up. It just doesn't work out with the way I like to listen to music. subtracks can't queue songs the way I want either, so that one's out. All that's left is Ultrasonic, which I've been using for a couple years, but that's why I'm here - I'm not satisfied with it.

If you know about any clients I haven't tested, or if I've made a mistake in my evaluation of these features let me know by email at jeff@blackolivepineapple.pizza :)


Installing voidlinux on a RaspberryPi

To install voidlinux on a Pi we'll have to do a chroot install. For official documentation on installing from chroot for void see here.

We need to install via chroot because the live images are made specifically for 2GB SD cards.

"These images are prepared for 2GB SD cards. Alternatively, use the ROOTFS tarballs if you want to customize the partitions and filesystems."

The installation can be split into 4 rough steps:

- Partition the disk (SD card in my case) you want to install void on

- Create the filesystems on the disk

- Copy in the rootfs

- Configure the rootfs to your liking

Prerequisites

Because we're going to be creating an aarch64 system you'll need some tool that allows you to run aarch64 binaries on an x86 system. To accomplish this we'll need the binfmt-support and qemu-user-static packages. To install them you can run

sudo xbps-install binfmt-support qemu-user-static

We'll also need to enable the binfmt-support service. To do this, run

sudo ln -s /etc/sv/binfmt-support /var/service/

Now you're one step away from being able to run aarch64 binaries in the chroot on your x86 system, but we'll get to that later.

Partition the disk you want to install void on

This is tricky because it depends a little on what you want to do. In my case I didn't allocate any swap space and kept the home directory on the root partition, which keeps things pretty simple.

In this case we're going to need two partitions. One 64MiB partition that is marked with the bootable flag and has the vfat type (0b in fdisk). And the other that takes up the rest of the SD card with type linux (83 in fdisk).

To create these partitions with fdisk run sudo fdisk /dev/sda where /dev/sda is the path to your disk. The path to your disk can be found running lsblk before and after plugging in the disk and seeing what shows up. Once fdisk drops you into the repl you can delete the existing partitions with the d command.
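If you don't trust your eyes with the before and after lsblk output, the comparison can be scripted. This is just a sketch: new_devices is a helper name I made up, and it only compares the two lists of device names it's handed.

```shell
#!/bin/sh
# Print the device names that appear in the second list but not the first.
# $1 = names before plugging in the disk, $2 = names after (one per line)
new_devices() {
  before_file=$(mktemp)
  after_file=$(mktemp)
  printf '%s\n' "$1" | sort > "$before_file"
  printf '%s\n' "$2" | sort > "$after_file"
  # comm -13 keeps only the lines unique to the second file
  comm -13 "$before_file" "$after_file"
  rm -f "$before_file" "$after_file"
}

# Real usage would look something like this:
#   before=$(lsblk -dno NAME)   # -d: whole disks only, -n: no header, -o NAME: names only
#   (plug in the SD card)
#   after=$(lsblk -dno NAME)
#   new_devices "$before" "$after"

# Demo with hardcoded lists:
new_devices "sda
nvme0n1" "sda
nvme0n1
sdb"
```

Whatever it prints is the name to use in place of sda in the rest of this post.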

Create the boot partition

Make a new partition with the n command, make it a primary partition with p, make it partition 1, and leave the first sector blank, which will keep it as the default. For the last sector put +64M which will give us a 64MiB partition (if you're asked to remove the signature it doesn't matter because we'll be overwriting that anyway). Use the a command to mark partition 1 bootable and lastly use the t command to make partition 1 type 0b, which is vfat.

Create the root partition

Now the root partition: use n to make a new partition, then leave everything else default. This will consume the rest of the disk for this partition. Same as before, if it asks you to remove the signature it doesn't matter because we'll be overwriting it anyway. To set the type use the t command and set it to type 83, which is the linux type.

That's all we need to do to set up the partitions. Make sure to save your changes with the w command!

The disk should be correctly partitioned now!
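I did all of this interactively in fdisk, but the same layout can also be written down as an sfdisk script, which is handy if you want to repeat it. I haven't used this as part of the install described here, so treat it as an untested sketch: void_rpi_layout is a made up function name, and /dev/sda is the same example device as above.

```shell
#!/bin/sh
# Print an sfdisk script describing the layout from this section:
# a 64MiB bootable vfat partition (type 0b) followed by a linux
# partition (type 83) that takes up the rest of the disk.
void_rpi_layout() {
  cat <<'EOF'
label: dos
size=64MiB, type=b, bootable
type=83
EOF
}

# Just print the layout so it can be reviewed first.
void_rpi_layout

# To actually apply it (this wipes the existing partition table!):
#   void_rpi_layout | sudo sfdisk /dev/sda
```

Keeping the layout in a function means you can read it over before piping it into anything destructive.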

Create the filesystems on the disk

This part is easy. Assuming the device is located at /dev/sda, partition 1 is the boot partition, and partition 2 is the root partition, just run these two commands.

mkfs.fat /dev/sda1 # Create boot vfat filesystem

mkfs.ext4 -O '^has_journal' /dev/sda2 # Create ext4 filesystem on the root partition (without journaling, the ^ turns the feature off)

Copy in the rootfs

For this step we'll need both partitions we set up earlier to be mounted. To mount the partitions run

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt

mount /dev/sda2 $MOUNT_PATH # Mount the root partition to the mount point

mkdir -p $MOUNT_PATH/boot # Create a directory named "boot" in the root partition

mount /dev/sda1 $MOUNT_PATH/boot # Mount the boot partition to that boot directory

Now we just need to extract the rootfs into our mount point.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

ROOTFS_TARBALL='/home/me/Downloads/void-rpi3-PLATFORMFS-20210930.tar.xz' # Replace with the path to the tarball you download from https://voidlinux.org/download/

# x - Tells tar to extract

# f - Tells tar to operate on the file path given after the f switch

# J - Tells tar to extract using xz, which is how the rootfs happens to be compressed

# p - Tells tar to preserve permissions from the extracted directory

# -C - Tells tar where to extract the contents to

tar xfJp $ROOTFS_TARBALL -C $MOUNT_PATH

That's it for this step! You might notice that we didn't explicitly copy anything into the $MOUNT_PATH/boot directory. The rootfs provided by void contains a /boot directory which will get placed into the $MOUNT_PATH/boot directory when we extract the tarball.

Configure the rootfs to your liking

This step is technically optional. If we just wanted to get a system up and running, we could plug the SD card in right now and it would boot up. We wouldn't have any packages (including base-system, which gives us dhcpcd, wpa_supplicant and other important packages), but it would boot. Additionally, the RaspberryPi (at least mine) doesn't have a hardware clock, so without an ntp package we won't be able to validate certs (because the time will be off), which prevents us from installing packages.

Some of the things we want to configure are most easily done through a chroot. The problem is that the binaries in the rootfs we copied over are aarch64 binaries.

Running aarch64 binaries in the chroot

Because your x86 system cannot run aarch64 binaries we need to emulate the aarch64 architecture inside the chroot. To accomplish this we copy an x86 binary that can do that emulation for us into the chroot, and then pass all aarch64 binaries through it when we go to run them.

If you've installed the qemu-user-static package you should have a set of qemu-*-static binaries in /bin/. For a RaspberryPi 3, we want qemu-aarch64-static. Copy that into the chroot.

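MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

cp /bin/qemu-aarch64-static $MOUNT_PATH/bin/ # Put the emulator at the same path inside the chroot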

Now you're ready to run the aarch64 binaries in your chroot.

Recommended configuration

To create a usable system there are a few things we need to set up that are somewhere between recommended and mandatory: the base-system package, ssh access, ntp, dhcpcd and a non-root user.

Because running commands in the chroot is slightly slower due to the aarch64 emulation, we'll try to set up as much of the rootfs as possible without actually chrooting.

First we should update all the packages that were provided in the rootfs.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

# Run a sync and update with the main machine's xbps pointing at our rootfs

env XBPS_ARCH=aarch64 xbps-install -Su -r $MOUNT_PATH

The base-system package

Just install the base-system package from your machine with the -r flag pointing at the $MOUNT_PATH.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

# Install base-system

env XBPS_ARCH=aarch64 xbps-install -r $MOUNT_PATH base-system

ssh access

We just need to activate the sshd service in the rootfs.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

ln -s /etc/sv/sshd $MOUNT_PATH/etc/runit/runsvdir/default/

There are two things here that look odd: 1. we're symlinking to our main machine's /etc/sv/sshd directory and 2. we're placing the symlink in /etc/runit/runsvdir/default/ instead of /var/service like is typical for activating void services.

- When we're chroot'ed in, or when the system is running on the Pi, /etc/sv/sshd will point to the Pi's sshd service.

- /var/service doesn't exist until the system is running, and when the system is up /var/service will be a series of symlinks pointing to /etc/runit/runsvdir/default/, so we can just link the sshd service directly into /etc/runit/runsvdir/default/.
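If the first point sounds like magic, it's easy to convince yourself with a throwaway directory. This sketch uses a temp directory (fake_root, a name I picked) as a stand-in for the mounted rootfs and never touches the real SD card.

```shell
#!/bin/sh
# Build a throwaway directory that stands in for the mounted rootfs.
fake_root=$(mktemp -d)
mkdir -p "$fake_root/etc/sv/sshd" "$fake_root/etc/runit/runsvdir/default"

# Same command as above, with the fake root in place of $MOUNT_PATH.
ln -s /etc/sv/sshd "$fake_root/etc/runit/runsvdir/default/"

# The link stores the literal path /etc/sv/sshd. Nothing in it mentions
# the fake root, so it resolves against whatever "/" is when it's followed.
link_target=$(readlink "$fake_root/etc/runit/runsvdir/default/sshd")
echo "sshd -> $link_target"

rm -rf "$fake_root"
```

From the main machine the link points at the main machine's /etc/sv/sshd (if it has one), but once the Pi boots with this filesystem as its root, the very same link lands on the Pi's own service directory.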

For security reasons I recommend disabling password authentication.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

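# Note: -i and -e are passed separately. GNU sed reads "-ie" as "-i with the backup suffix e" and leaves an sshd_confige file behind

sed -i -e 's/#PasswordAuthentication yes/PasswordAuthentication no/g' $MOUNT_PATH/etc/ssh/sshd_config

sed -i -e 's/#KbdInteractiveAuthentication yes/KbdInteractiveAuthentication no/g' $MOUNT_PATH/etc/ssh/sshd_config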
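One thing to watch for is that the substitutions above only match the commented out defaults. If your sshd_config already has the option uncommented, nothing changes. Below is a slightly more forgiving sketch that handles both cases. It assumes GNU sed (for -i), and sshd_disable is a helper name I made up. The demo runs against a scratch file rather than the real config.

```shell
#!/bin/sh
# Set an sshd_config option to "no" whether or not it's commented out.
# $1 = option name, $2 = path to the sshd_config file
sshd_disable() {
  # -i and -e are separate flags on purpose: "-ie" would be read by
  # GNU sed as "-i with backup suffix e".
  sed -i -e "s/^#\{0,1\}$1 .*/$1 no/" "$2"
}

# Try it on a scratch file instead of the real config.
scratch=$(mktemp)
printf '%s\n' '#PasswordAuthentication yes' 'KbdInteractiveAuthentication yes' > "$scratch"

sshd_disable PasswordAuthentication "$scratch"
sshd_disable KbdInteractiveAuthentication "$scratch"
cat "$scratch"
```

For the real thing you'd pass $MOUNT_PATH/etc/ssh/sshd_config as the second argument.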

ntp

We need an ntp package because the RaspberryPi doesn't have a hardware clock, so when we boot it up the time will be January 1, 1970. That causes certificate validation failures, which prevent us from installing packages and more.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

env XBPS_ARCH=aarch64 xbps-install -r $MOUNT_PATH openntpd

ln -s /etc/sv/openntpd $MOUNT_PATH/etc/runit/runsvdir/default/

Same as before, we just install the package with our local xbps package manager pointing at the chroot and then set the service up to run by symlinking it at the end of the symlink chain.

dhcpcd

The base-system package should have covered the install of dhcpcd, so all we have to do is activate the service. Like before, we'll symlink directly to the end of the symlink chain.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

ln -s /etc/sv/dhcpcd $MOUNT_PATH/etc/runit/runsvdir/default/

A non-root user

This probably depends on your use-case, but having everything running as root is usually bad news, so setting up a non-root user which we can ssh in as is probably a smart idea.

This is the first part of the configuration that is truly best done inside the chroot, so make sure you have the filesystem mounted and have copied the qemu-aarch64-static binary into the chroot.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

# After executing this command all subsequent commands will act like

# you're running on Pi instead of your main machine

chroot $MOUNT_PATH

USERNAME='me' # Replace with your desired username

groupadd -g 1000 $USERNAME # Create our user's group

# Add our user and add it to the wheel group and our personal group

# Depending on your needs you could additionally add yourself to

# other default groups like: floppy, dialout, audio, video, cdrom, optical

useradd -g $USERNAME -G wheel $USERNAME

# Set our password interactively

passwd $USERNAME

sed -i -e 's/# %wheel ALL=(ALL) ALL/%wheel ALL=(ALL) ALL/g' /etc/sudoers # Allow users in the wheel group sudo access (we're inside the chroot, so this is the Pi's /etc/sudoers)

At this point the root account's password is still "voidlinux". We wouldn't want our system running with the default root password, so to remove it run

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

chroot $MOUNT_PATH # Run this if you're not in the chroot

passwd --delete root

If you set up ssh access and disabled password authentication you'll want to add your ssh key to the rootfs.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

USERNAME='me' # Replace with your desired username

mkdir -p $MOUNT_PATH/home/$USERNAME/.ssh # -p also creates the home directory if useradd didn't

cat /home/$USERNAME/.ssh/id_rsa.pub > $MOUNT_PATH/home/$USERNAME/.ssh/authorized_keys
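sshd can be picky about permissions on the .ssh directory, so here's a sketch of the same step with the permissions set explicitly. install_ssh_key is a made up helper, and the demo uses a scratch directory and a fake key instead of the real rootfs. Depending on how the home directory was created you may also need to chown the files to your user from inside the chroot, which I've left out here.

```shell
#!/bin/sh
# Append a public key to a user's authorized_keys inside a rootfs.
# $1 = path to the mounted rootfs, $2 = username on the Pi, $3 = public key file
install_ssh_key() {
  ssh_dir="$1/home/$2/.ssh"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  cat "$3" >> "$ssh_dir/authorized_keys"
  chmod 600 "$ssh_dir/authorized_keys"
}

# Demo against a scratch directory with a fake key.
demo_root=$(mktemp -d)
echo 'ssh-ed25519 AAAAexample me@laptop' > "$demo_root/key.pub"
install_ssh_key "$demo_root" me "$demo_root/key.pub"
ls -l "$demo_root/home/me/.ssh"
```

With the real card mounted, the call would be something like install_ssh_key "$MOUNT_PATH" "$USERNAME" ~/.ssh/id_rsa.pub.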

Clean up

According to the void docs we should remove the base-voidstrap package and reconfigure all packages in the chroot to ensure everything is set up correctly.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

chroot $MOUNT_PATH

xbps-remove -y base-voidstrap

xbps-reconfigure -fa

Now that we're done in the chroot we can delete the qemu-aarch64-static binary that we put in there.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

rm $MOUNT_PATH/bin/qemu-aarch64-static

That's it!

Make sure to unmount the disk before removing it from your machine because we wrote a lot of data and that data might not be synced until we unmount it.

MOUNT_PATH='/mnt/sdcard' # Replace with any path to an empty directory. By convention it would be in /mnt (same mount path as above)

umount $MOUNT_PATH/boot

umount $MOUNT_PATH

Lastly, with some care, a lot of these steps can be combined. To see what that might look like check out this repo

Now you should be able to put the SD card into the Pi, boot it up and have ssh access!

Tilemaps with data

How and why you might want tilemaps that have data associated with the tiles in Godot.

Problem: You have tiles that need to track some state

In my case I wanted to have tiles that could be destroyed after multiple hits.

There are three ways I considered doing this:

- Don't use a TileMap, just use Nodes with Sprite2Ds attached and have some logic that makes sure they are placed on a grid, as if they were rendered with a TileMap

- Extend the TileMap class and maintain a Dictionary of Vector2 -> <Custom class>

- (The option I went with) Extend the TileMap class and maintain a Dictionary of Vector2 -> <Node with a script attached>

Options 2 and 3 are very similar; one might be better than the other depending on the use case.

extends TileMap

export(PackedScene) var iron_ore

# This holds references to the nodes so we
# can access them with TileMap coordinates
var cell_data : Dictionary = {}

# Called when the node enters the scene tree for the first time.
func _ready():
    # Create 10 ores in random locations on the tilemap
    for x in range(10):
        var node = spawn_ore()
        var cell = world_to_map(node.position)
        set_cellv(cell, node.id)
        cell_data[cell] = node

func spawn_ore():
    # This iron_ore Node has no sprites attached to it
    # it's just a Node that holds a script which contains
    # helper functions
    var node = iron_ore.instance()
    var width = 16
    var height = 16
    var x = randi() % 30
    var y = randi() % 30
    add_child(node)
    node.position = Vector2(x * 16 + width / 2, y * 16 + height / 2)
    return node

# This function deals with the player hitting a tile
# when a player presses the button to swing their pickaxe
# they call this function with the tilemap coords that they're aiming at
func hit_cell(x, y):
    var key = Vector2(x, y)
    # Check if that cell is tracked by us
    if cell_data.has(key):
        # Note: cell_data[key] is a Node
        cell_data[key].health -= 1
        # If the ore is out of health we destroy it
        # and clean it up from our cell_data map
        if cell_data[key].health == 0:
            # Set the tile's sprite to empty
            set_cell(x, y, -1)
            # Destroy the Node
            var drops = cell_data[key].destroy()
            # Get drops from the ore
            for drop in drops:
                add_child(drop)
            # Clean up the cell_data map
            cell_data.erase(key)
        return true
    return false

This is the script attached to the Nodes we reference in the TileMap

extends Node2D

# The chunk that's dropped after mining this ore
export(PackedScene) var iron_chunk

const id: int = 0
var health: int = 2

func destroy():
    var node = iron_chunk.instance()
    node.position = position
    queue_free()
    return [node]

What does this actually do?

When the player mines the ore you can see that the nodes in the remote scene view (on the very left) are replaced with an iron chunk.

This is the iron chunk generated from destroy() in iron_ore.gd.

After the player picks up the iron chunk it's gone for good.

Why is this better than cutting out the TileMap and using Node2D directly?

- It allows us to have the rendering logic handled by a TileMap, which means that our ore can't be placed somewhere it shouldn't be.

- TileMaps tend to be slightly more optimized for rendering. I don't know about Godot specifically, but this probably has some minor performance benefits. Although, this is probably irrelevant for my case.

- We still get all the benefits of having Nodes, because the tiles are backed by actual Node instances.

Why is this better than using a custom class rather than a Node?

Here's what that class might look like:

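class IronOre:
    const id: int = 0
    var health: int = 2
    var iron_chunk: PackedScene

    # Note: position and queue_free() are carried over from the Node2D
    # version. A plain class doesn't have them built in, so it would
    # need its own position field and its own cleanup.
    func destroy():
        var node = iron_chunk.instance()
        node.position = position
        queue_free()
        return [node]

    func _init(chunk):
        iron_chunk = chunk
        # We could remove the need to pass in chunk
        # if we loaded the chunk scene with a hardcoded string
        # load("res://iron_ore.tscn")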

Notice that it's basically the same as iron_ore.gd.

We'd use IronOre.new(iron_chunk) instead of iron_ore.instantiate() to create it, but that's not necessarily a problem.

Where this does run into issues is with getting the iron_chunk reference.

When using the class we need to load the PackedScene somehow. This could be done by hardcoding it in, i.e. load("res://iron_ore.tscn"), which would remove the need for the _init(chunk) constructor.

Or we could export a variable in our TileMap which is then passed through when we instantiate the IronOre class, like this:

extends TileMap

# Notice this is iron_chunk (the thing that iron_ore drops), _not_ iron_ore (the thing that a player mines)
export(PackedScene) var iron_chunk

...

func spawn_ore():
    # Pass the iron_chunk PackedScene through
    var node = IronOre.new(iron_chunk)
    var width = 16
    var height = 16
    var x = randi() % 30
    var y = randi() % 30
    ...

This works, but if we need to pass in more PackedScenes to IronOre we'll have to export those through the TileMap.

And if we introduce more types of ore, we'll have to export even more variables through the TileMap.

The worst part of this is that these scenes don't have anything to do with the TileMap.

On the other hand, by having Nodes be the backend we can use the editor to drag-and-drop the correct chunk for each ore scene.

We still have to export a variable in the TileMap for each ore type, but that's it!

Why is this worse than the other options?

There are some trade-offs we make by using this method.

- We have to maintain the node tree and keep that in sync. With the class method we'd have to ensure we free our memory, and this has the same issue. Every time we create a node we need to queue_free it if we remove it.

- We have two ways to refer to the "position" of the ore. The Node has a position and we have a position which acts as a key for the dictionary. The Node position should never be used, so it doesn't have to be kept in sync, but you need to make sure you never use it.

Another strategy?

While writing this I thought it might be possible to get the best of both worlds by using Resources instead of Nodes to hold the state.

I think this might give us the ability to:

- Call functions, hold data, and be separate from the TileMap file (both methods have this already)

- Edit variables from the editor (like the Node method can do)

- Cut out the need to manage Nodes in the node tree, which could reduce clutter (like the class solution can do).

I'm not totally sure if the third one is possible, but this seems worth investigating!

Setting up an nfs server for persistent storage in k8s

These are some helpful tips I found when trying to set up an nfs for persistent volumes on my k8s cluster. Setting up the actual persistent volumes and claims will come later.

Prerequisites

Some details in these tips (package names, directories, etc.) are specific to voidlinux, which is the flavor of linux I'm running my nfs on. There is almost certainly an equivalent in your system, but the name may be different.

tl;dr

- Make sure you have the statd, nfs-server, and rpcbind services enabled on the server.

- Use /etc/exports to configure what directories are exported.

- Run exportfs -r to make changes to /etc/exports real.

Setup

Actually setting up the nfs is pretty easy.

Just install the nfs-utils package and enable the nfs-server, statd, and rpcbind services.

That's it.
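As a rough sketch of what "install and enable" looks like on Void: runit services are enabled by symlinking /etc/sv/<name> into /var/service. The helper below only prints the commands so you can read them before running anything. The function name enable_cmds is made up for this example, and the paths assume a stock Void install.

```shell
#!/bin/sh
# Sketch only: print the commands instead of running them.
# Assumes a stock Void Linux layout (/etc/sv and /var/service).
enable_cmds() {
    echo "xbps-install -S nfs-utils"
    for svc in "$@"; do
        echo "ln -s /etc/sv/$svc /var/service/"
    done
}

enable_cmds rpcbind statd nfs-server
```

Once the output looks right for your machine, the printed commands can be run as root.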

Configuration

Now that you have an nfs server you need to configure which directories are available for a client to mount.

This is done through the /etc/exports file.

I found this site to be quite useful in explaining what some of the options in /etc/exports are and what they mean.

Specifically, debugging step 3 (setting the options to (ro,no_root_squash,sync)) was what finally got it working for me when I was receiving mount.nfs: access denied by server while mounting 192.168.0.253:/home/jeff/test.

My /etc/exports file is just one line:

/watermelon-pool 192.168.0.0/24(rw)

- /watermelon-pool is the path to my zfs pool which is where I store this kind of data.

- 192.168.0.0/24 is the network prefix that my machines are in.

- (rw) allows those machines to read and write to the nfs

After you make changes to /etc/exports make sure to run exportfs -r.

exportfs -r rereads /etc/exports and exports the directories specified there.

Essentially, you need to run it every time you edit /etc/exports.
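Here's a small sketch of the edit-then-reload loop described above. The exports_line helper is hypothetical (it just formats one entry), and the exportfs calls are left as comments because they need root and a real nfs server.

```shell
#!/bin/sh
# Hypothetical helper: format one /etc/exports entry.
# No space goes between the client and its options; "net (rw)" would
# be read as two separate clients rather than one client with options.
exports_line() {
    printf '%s %s(%s)\n' "$1" "$2" "$3"
}

exports_line /watermelon-pool 192.168.0.0/24 rw

# To apply for real (as root): put the line in /etc/exports, then
#   exportfs -r    # re-read /etc/exports
#   exportfs -v    # list what is currently exported, with options
```

Running exportfs -v afterwards is a quick way to confirm the server picked up the options you meant.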

For some reason I had issues when not specifying the no_root_squash option for some directories.

I still don't have a good answer for what's up with that, but you can read my (still unanswered) question on unix stack exchange if you want.

This didn't affect my ability to use this nfs server as a place for persistent storage for kubernetes though.

It seemed to be a void specific bug that only affects certain directories (specifically my home directory), but I'm still not sure.

Read the docs

Unsurprisingly, the voidlinux docs on setting up an nfs server were pretty helpful, who knew? There are a few pretty non-obvious steps when setting up an nfs on void. Notably, you have to enable the rpcbind and statd services on the nfs server in addition to the nfs-server service.

Errors I received and how I fixed them

- Command:

showmount -e 192.168.0.253

Received: clnt_create: RPC: Program not registered

Fix: Start statd service on server

- Command:

showmount -e 192.168.0.253

Received: clnt_create: RPC: Unable to receive

Fix: Start rpcbind service on server

- Command:

sudo mount -v -t nfs 192.168.0.253:/home/jeff/test nas/

Received: mount.nfs: mount(2): Connection refused

Fix: Start rpcbind service on server

- Command:

sudo sv restart nfs-server

Received: down: nfs-server: 1s, normally up, want up

Fix: Start rpcbind and statd services on server

- Command:

sudo mount -v -t nfs 192.168.0.253:/home/jeff/test nas/

Received: mount.nfs: mount(2): Permission denied
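The list above can be turned into a tiny lookup, sketched below. It only encodes the error/fix pairs from this post, nothing more general, and the function name suggest_fix is made up. The last error has no recorded fix, so it falls through to the default.

```shell
#!/bin/sh
# Map an error message from the list above to the fix that worked for it.
suggest_fix() {
    case "$1" in
        *"Program not registered"*) echo "start statd" ;;
        *"Unable to receive"*)      echo "start rpcbind" ;;
        *"Connection refused"*)     echo "start rpcbind" ;;
        *"normally up, want up"*)   echo "start rpcbind and statd" ;;
        *)                          echo "no fix recorded" ;;
    esac
}

suggest_fix "clnt_create: RPC: Program not registered"
```

If you're unsure which service is missing, rpcinfo -p 192.168.0.253 should list the RPC programs the server has registered, which is a decent first check.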

Random tips

- Make sure the nfs-server service is actually up.

sv doesn't make this super clear in my opinion.

For example this means everything is good

> sudo sv restart nfs-server

ok: run: nfs-server: (pid 9446) 1s

while this means everything is broken

> sudo sv restart nfs-server

down: nfs-server: 1s, normally up, want up

Not quite as different as I would like :/

If you find that your nfs-server service isn't running it might be because you haven't enabled the statd and rpcbind services.

- You can mount directories deeper than the exported directory.

For instance, if you put /home/user * in /etc/exports you can mount /home/user/specific/path (assuming it exists on the nfs server) like this:

sudo mount -t nfs4 192.168.0.253:/home/user/specific/path /mnt/mount_point
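Going back to the first tip: since the two sv outputs look so similar, a small check like the one below can make the difference explicit. This is a sketch that only looks at the start of the line; sv_is_up is a made-up name, and the sample strings are the ones shown above.

```shell
#!/bin/sh
# Return 0 if a line of sv output reports a running service.
# Matches "run: ..." (sv status) and "ok: run: ..." (sv restart).
sv_is_up() {
    case "$1" in
        "run: "*|"ok: run: "*) return 0 ;;
        *)                     return 1 ;;
    esac
}

if sv_is_up "ok: run: nfs-server: (pid 9446) 1s"; then echo UP; else echo DOWN; fi
if sv_is_up "down: nfs-server: 1s, normally up, want up"; then echo UP; else echo DOWN; fi
```

On a real server you'd feed it live output, something like sv_is_up "$(sudo sv status nfs-server)".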

Adding a new node to the cluster

This is a guide on adding a new raspberry pi node to your k3s managed kubernetes cluster.

tl;dr

- Write Raspberry Pi OS to an sd card. Found here

- Boot 'er up

- ssh in and configure

- Install k3s

Slightly more detailed version

- Write Raspberry Pi OS to an sd card

- Download Raspberry Pi OS. Found here

- Unzip it:

unzip 2020-08-20-raspios-buster-armhf-lite.zip

- Copy image to SD card:

sudo dd if=/path/to/raspberryPiOS.img of=/dev/sdX bs=4M conv=fsync

(where /dev/sdX is the SD card device)

- Mount SD card:

sudo mount /dev/sdX /mnt/sdcard

(/mnt/sdcard can be any empty directory)

- Add "ssh" file to filesystem which causes the ssh server to start on boot:

sudo touch /mnt/sdcard/ssh

- Unmount it:

sudo umount /mnt/sdcard

- Boot 'er up

- Put the SD card in the pi

- Plug in the pi

- Give it a minute or two

- ssh in and configure

- ssh in:

ssh pi@raspberrypi

password is "raspberry"

- Update and install vim and curl:

sudo apt update && sudo apt upgrade -y && sudo apt install -y vim curl

Although vim isn't strictly necessary and curl is on the image by default, I like vim and we'll use curl later so better to make sure it's already there.

- Make yourself a user:

sudo useradd -m -G adm,dialout,cdrom,sudo,audio,video,plugdev,games,users,input,netdev,gpio,i2c,spi jeff

The comma-separated list after -G is the groups that you are adding your user to. The only super important one is probably sudo. This is the list that the default pi user starts in so might as well.

- Create a .ssh directory so you can get in to your user:

sudo -u jeff mkdir /home/jeff/.ssh

We use sudo -u jeff here so that it runs as the jeff user and makes jeff the owner by default.

- Slap your public ssh keys into the authorized_keys file:

sudo -u jeff curl https://github.com/ToxicGLaDOS.keys -o /home/jeff/.ssh/authorized_keys

Here we curl the key down from a github account straight into the authorized_keys file. If your keys aren't on github you might scp them onto the pi.

- Change the hostname of your machine by editing the /etc/hosts and /etc/hostname files. This can be done manually or with some handy sed commands.

sudo sed -i s/raspberrypi/myHostname/g /etc/hosts

sudo sed -i s/raspberrypi/myHostname/g /etc/hostname

- Disable password authentication into the pi (optional, but pretty nice)

- Manually: Open /etc/ssh/sshd_config and edit the line that says #PasswordAuthentication yes so it says PasswordAuthentication no. If this line doesn't exist add the PasswordAuthentication no line.

- Automatic (relies on commented version being there):

sudo sed -i s/#PasswordAuthentication\ yes/PasswordAuthentication\ no/g /etc/ssh/sshd_config

- Allow passwordless sudo:

echo 'jeff ALL=(ALL) NOPASSWD:ALL' | sudo tee -a /etc/sudoers

This is a little dangerous, because if your account on the machine gets compromised then an attacker could run any program as root :(. Also if you fail to give yourself passwordless sudo access and restart the pi you can end up being unable to sudo at all, which means you can't access /etc/sudoers to give yourself sudo access... So you might end up having to re-image the SD card cause you're boned. Not that that has happened to me of course... :(

- Delete the default pi user:

sudo userdel -r pi

- Install k3s

curl -sfL https://get.k3s.io | K3S_URL=https://masterNodeHostname:6443 K3S_TOKEN=yourToken sh -

This pulls down a script provided by k3s and runs it, so maybe check to make sure k3s is still up and reputable. Make sure to replace masterNodeHostname and yourToken with your values. masterNodeHostname is the hostname of the master node in your cluster (probably the first one you set up); in my case it's raspberry0. yourToken is an access token used to authenticate to your master node. It can be found on your master node in the /var/lib/rancher/k3s/server/node-token file. Read more at k3s.io.

That's basically it!
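One step worth a second look is disabling password authentication, since the sed one-liner above only works when the commented-out line is present. Below is a sketch that covers both cases (rewrite the line if it's there, append it if it isn't). The function name disable_password_auth is made up, it assumes GNU sed (which is what Raspberry Pi OS ships), and it takes the file path as an argument so you can try it on a copy before touching /etc/ssh/sshd_config.

```shell
#!/bin/sh
# Set "PasswordAuthentication no" in an sshd_config-style file.
# Rewrites an existing (possibly commented-out) directive, or appends
# one if the directive is missing. Assumes GNU sed for -i and -E.
disable_password_auth() {
    file=$1
    if grep -Eq '^#?[[:space:]]*PasswordAuthentication[[:space:]]' "$file"; then
        sed -i -E 's/^#?[[:space:]]*PasswordAuthentication[[:space:]].*/PasswordAuthentication no/' "$file"
    else
        echo 'PasswordAuthentication no' >> "$file"
    fi
}

# Try it on a scratch file, not the live config.
tmp=$(mktemp)
printf '%s\n' 'Port 22' '#PasswordAuthentication yes' > "$tmp"
disable_password_auth "$tmp"
cat "$tmp"
rm -f "$tmp"
```

After running it against the real config (with sudo), sshd needs a restart for the change to take effect. Keep your current ssh session open until you've confirmed key login works in a second one.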